Structured Concurrency

Index

- Structured Concurrency

- async/await

- async-let

- Tasks

- Actors

- AsyncSequence

- Preparing for Swift 6

- Testing

- See also

Structured Concurrency

Structured Concurrency is a set of features to augment concurrency in the Swift language. It provides:

- async/await and async-let.

- A cooperative thread pool to run tasks with priorities, dependencies, local values, and groups.

- Sendable: A marker protocol that signifies thread-safe types.

- Actors: compiler-implemented reference types that ensure *mutual exclusion*.

- Continuations: a bridge between traditional callbacks and new async functions.

The main difference is that Structured Concurrency uses cooperative multitasking to enforce forward progress.

Cooperative what?

- There is a cooperative thread pool where threads are taken and returned to.

- Work is divided in tasks, each of which runs on one thread taken from a pool.

- Tasks voluntarily return their thread to the pool.

By contrast, in preemptive multitasking:

- The system distributes execution time between processes.

- Processes are paused and resumed without their knowledge.

An important goal of Structured Concurrency is forward progress, a state where tasks progress towards completion. This is enforced by not using blocking operations like, for instance, semaphores.

Waiting on a semaphore is a blocking operation: a thread waits for a signal that is sent programmatically from another thread. If that signal is not sent correctly, the waiting thread may be delayed or never progress. Always treat blocking code as an island, and never mix it with structured concurrency.

async/await

An asynchronous function is one that suspends itself while waiting for a slow computation to complete. The key innovation is that suspension does not block the thread, as is typically the case with synchronous functions.

Consider the following example:

func execute(request: URLRequest) async throws -> (Data, URLResponse) {

try await URLSession.shared.data(for: request, delegate: nil)

}

let request = URLRequest(url: URL(string: "http://foo.com")!)

let (data, response) = try await execute(request: request)

Two aspects are noteworthy:

- It runs a network request without a completion handler.

- The function is declared with async and called with await.

// declaration examples

func f() async { ... }

func f() async -> X { ... }

func f() async throws { ... }

func f() async throws -> X { ... }

// call examples

await f()

try await f()

let x = await f()

let x = try await f()

See also:

- Example

- Why is this important?

- How does it work?

- Explicit continuations, API

- Don’t mix SC and blocking primitives

Example


import Foundation

// Return the first 'lines' of the given URL.

func head(url: URL, lines: Int) async throws -> String {

try await url.lines.prefix(lines).reduce("", +)

}

// Async functions can only be called from 'async contexts'

// Here, an async context is created with Task {}

Task {

let url = URL(string: "https://www.google.com")!

let firstLine = try await head(url: url, lines: 1)

print(firstLine)

}

// Wait 2 seconds for the async function to complete

RunLoop.current.run(

mode: RunLoop.Mode.default,

before: NSDate(timeIntervalSinceNow: 2) as Date

)

- head is an async function that calls another async function (URL.lines).

- Async functions must be called from async contexts. An async context is created here using Task {}. We’ll see tasks in a bit.

This code compiles since Xcode 13 on Big Sur, or swiftc on macOS Monterey. I prefer CodeRunner for small examples as playgrounds can be cumbersome.

Starting with Xcode 13.2 beta, deployment to previous OS versions is supported: macOS 10.15, iOS 13, tvOS 13, and watchOS 6 or newer.

Why is this important?

- Less verbose: no pyramid of doom.

- One execution path: no nesting or many paths for error/success.

- Allows async functions to return a value, which prevents missed completion calls.

- Eliminates the need to avoid unintentional captures using weak/unowned.

- Offers less cognitive overhead and improved performance compared to reactive programming.

GCD can be problematic

Apple advises using one serial GCD queue per subsystem in your app. However:

- If the queue is not running, scheduled work runs without a context switch.

- If the queue is running, the calling thread is blocked. We say that the queue is under contention.

- Possible deadlocks from threads competing for the same lock.

- Overhead that may negate the benefits of asynchronous work.

- Memory overhead from the stack and kernel data structures tracking the thread.

- Scheduling overhead.

- Context-switching overhead.

This can be ameliorated by scheduling work with dispatch async, at the cost of creating a new thread to schedule the work on the serial queue. Although this requires a context switch and incurs extra overhead, it prevents blocking the thread.

In short: GCD is difficult to use and prone to overuse. Concurrency with GCD is often less efficient than employing a serial queue.

How does it work?

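With async/await, fetching a string from a URL looks like this:

func get(url: URL) async throws -> String {
    let (data, _) = try await URLSession.shared.data(from: url)
    return String(data: data, encoding: .utf8)!
}

To offer a similar signature without async, a synchronous version has to block the calling thread until the callback fires:

// Sketch: blocks the caller with a semaphore until the completion handler runs.
func get(url: URL) -> String {
    let semaphore = DispatchSemaphore(value: 0)
    var string: String!
    URLSession.shared.dataTask(with: url) { data, _, _ in
        string = data.flatMap { String(data: $0, encoding: .utf8) } ?? ""
        semaphore.signal()
    }.resume()
    semaphore.wait() // the calling thread is blocked here
    return string
}

This gets rid of the completion handler, but it’s verbose and incurs overhead, besides hiding the internal async call. Instead, async functions use some magic sauce to avoid it.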

When you call an async function this happens:

- The caller code is suspended and stored as a heap “async frame” (not on the stack!).

- The caller thread becomes free to execute any other async frame (it is not blocked!).

- The async function called executes on any available thread.

- When the async function finishes, the first thread available resumes executing the async frame of the caller.

Threads are not blocked, so there is no chance for deadlock or thread explosion.

Explicit continuations

import Foundation

func functionWithCompletionHandler(_ handler: @escaping @Sendable (Int) -> Void) {

// Simulate a slow operation

DispatchQueue.global().asyncAfter(deadline: .now() + 1) {

handler(42) // Call the handler when done

}

}

func asyncFunction() async throws -> Int {

try await withCheckedThrowingContinuation { (continuation: CheckedContinuation<Int, Error>) in

// Register the completion handler

functionWithCompletionHandler { result in

continuation.resume(returning: result)

}

}

// Suspension happens *after* the closure exits

}

// let’s run the example above

Task {

do {

let value = try await asyncFunction()

print("Got async value:", value)

} catch {

print("Error:", error)

}

}

// When launching as a script keep the RunLoop alive to let the async code finish

RunLoop.current.run(

mode: .default,

before: Date(timeIntervalSinceNow: 2)

)

withCheckedThrowingContinuation does two things:

- Runs the closure. This is your chance to set up the logic that will eventually call resume().

- Suspends and waits for a call to `continuation.resume()`.

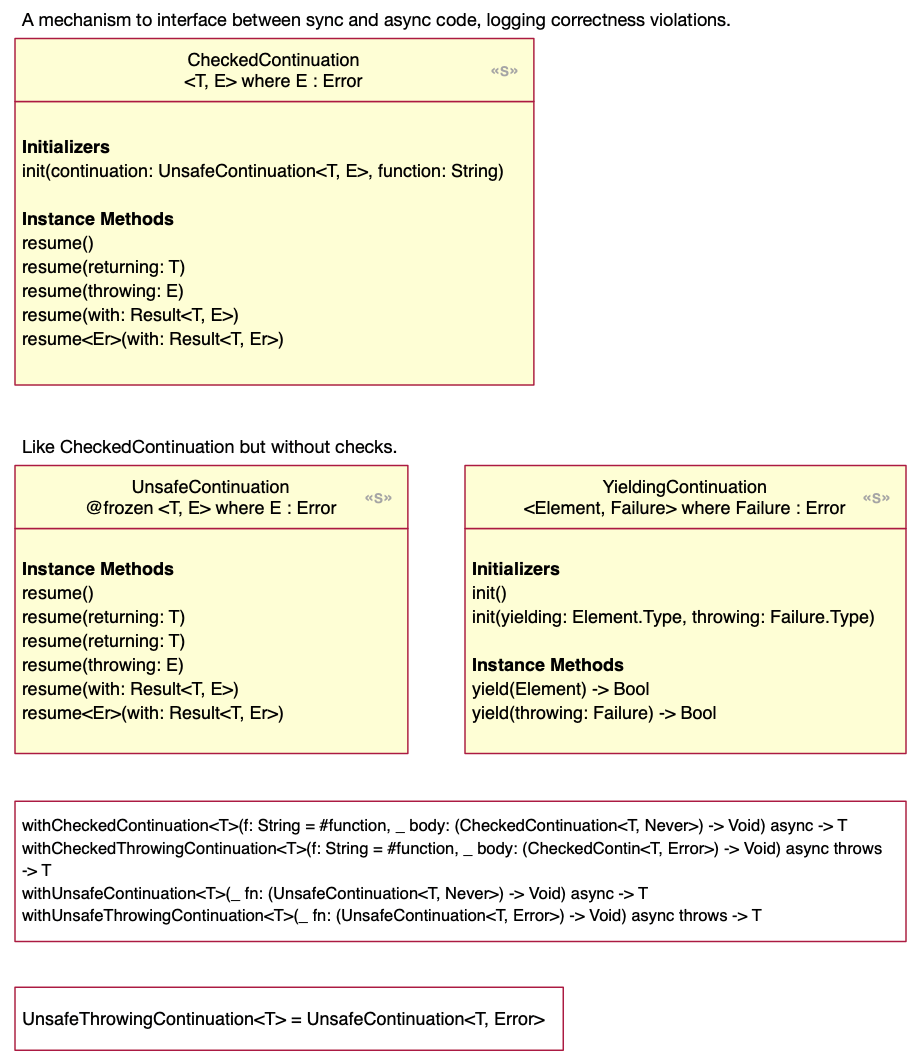

There are two kinds of continuation:

- CheckedContinuation: checks at runtime that you call resume() exactly once.

- UnsafeContinuation: doesn’t check anything.
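As a hedged sketch of the same bridging idea for callbacks that can fail: when the callback delivers a Result, continuation.resume(with:) forwards either the value or the error in a single call. Both legacyLoadNumber and loadNumber are hypothetical names made up for this illustration.

```swift
import Foundation

// Hypothetical callback-based API standing in for legacy code.
func legacyLoadNumber(completion: @escaping @Sendable (Result<Int, Error>) -> Void) {
    DispatchQueue.global().async {
        completion(.success(42))
    }
}

// Async wrapper around the callback API.
func loadNumber() async throws -> Int {
    try await withCheckedThrowingContinuation { continuation in
        legacyLoadNumber { result in
            // resume(with:) returns the value on success and throws on failure.
            continuation.resume(with: result)
        }
    }
}
```

Using resume(with:) keeps a single resume call on every path, which is what CheckedContinuation verifies.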

API

Don’t invoke blocking primitives from Structured Concurrency

Don’t invoke blocking primitives on a thread managed by Structured Concurrency.

- Problematic example: using semaphores inside SC. Given the unknown size of the cooperative thread pool, if semaphores block and exhaust the pool, no threads will be available for signaling.

- Problematic example #2: you spawn a Task that calls a library that uses a blocking primitive. There is no way to know this in advance. It’s not the end of the world, but it results in degraded performance.

Blocking code is not legacy code. A reason to use blocking is when the overhead of asynchronous management, in terms of complexity and runtime performance, outweighs the benefits. Two reasons not to use blocking are that performance is only a problem when there isn’t enough of it, and that it is more productive to use the highest-level construct available.

For example, if SC needs an I/O operation, it offloads the work to the system, where it may end up in a high-performance, low-level layer. Should we need such a thing, there are safe ways to invoke GCD from SC while ensuring each system manages its own threads.

How to integrate code with blocking primitives:


func performAsyncTask() async {
    await withCheckedContinuation { continuation in
        // async (not sync): the closure must return immediately so the task can suspend
        DispatchQueue.global(qos: .userInitiated).async {
            // (...) code with I/O, semaphores, etc.
            continuation.resume()
        }
    }
}

This is safe because withCheckedContinuation suspends the task and frees its thread as soon as the closure returns. The blocking code then runs on a thread managed by the global queue, not by SC. Finally, continuation.resume() resumes the task.

async-let

An async-let binds the result of an async function but doesn’t await until the result is first referenced.

async let result = f()

// ... execution continues while f is executing

await print(result) // caller suspends awaiting the result

See also: example.

Example

import Foundation

func get(url: URL) async throws -> String {

let (data, _) = try await URLSession.shared.data(from: url)

return String(data: data, encoding: .utf8)!

}

Task {

let url = URL(string: "https://www.google.com")!

async let string = get(url: url)

print("runs immediately")

await print(try string.prefix(15).description)

}

// wait 2 seconds so the async function can finish

RunLoop.current.run(

mode: RunLoop.Mode.default,

before: NSDate(timeIntervalSinceNow: 2) as Date

)

This prints runs immediately followed by the first 15 characters of the page. The call to get(url: url) starts right away and runs concurrently; the task only suspends when the result (string) is referenced.

Behind the scenes, async-let spawns a child Task that runs the async function and is implicitly awaited when the result is first referenced.
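A minimal sketch of why this matters: two async-let bindings start their child tasks right away, so both computations overlap and the caller only suspends where the results are read. The functions square and sumOfSquares are hypothetical helpers, and the sleep stands in for slow work.

```swift
// Hypothetical slow computation.
func square(_ n: Int) async -> Int {
    try? await Task.sleep(nanoseconds: 100_000_000)
    return n * n
}

func sumOfSquares() async -> Int {
    async let a = square(3) // child task starts here
    async let b = square(4) // second child task starts here, concurrently
    return await a + b      // suspends until both results are available
}
```

With sequential awaits the two sleeps would add up; with async-let they overlap.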

Tasks

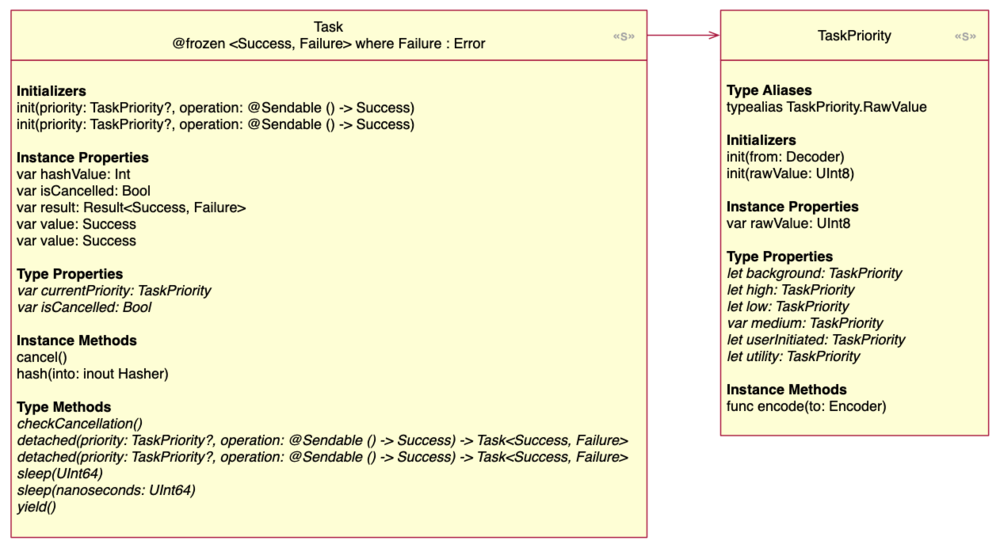

Task

A task is a unit of asynchronous work.

Task features:

- They express child-parent dependencies –this prevents priority inversion.

- They can be canceled, queried, re-prioritized.

- They store task-local values that are inherited by child tasks.

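Tasks are called different things depending on how they are created. Note that only async let and task groups create child tasks; a Task {} created inside another task is unstructured.

Task { ... } // unstructured task

Task.detached { ... } // detached task

let outerTask = Task { // enclosing task
    Task {} // unstructured task: inherits priority and task-local values, but is not a child
    Task.detached {} // detached task: inherits nothing
}

outerTask.cancel() // cancels outerTask and its child tasks (async let, task groups), but neither of the two inner tasks

Task eats exceptions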

If the code inside a Task throws an error and nobody reads the task’s value or result, the error is silently discarded: you won’t receive any warning. If you want to remind yourself to catch errors, you can write this instead:

typealias SafeTask<T> = Task<T, Never>

// usage

SafeTask<Void> {

// no throwing allowed here

// if anything throws you must wrap it in a do/catch

}

Detached tasks

A detached task doesn’t inherit anything from the task that created it:

- it doesn’t inherit priorities

- it doesn’t inherit local task storage

- it’s not canceled when the creating task is

A detached task completes even when there are no references pointing to it.

See also:

- Why do tasks exist?

- Return a result from a task

- Using Task() without priority argument

- API

Why do tasks exist?

Before structured programming, control flow used global state and jumped all over using goto. It was hard to follow because it lacked local reasoning: the ability to figure out the code in front of your eyes without reading elsewhere.

Similarly multithreaded code is unstructured:

- GCD blocks can’t express dependencies between them.

- Code is all over the place: multiple paths, hard to read, verbose handling, must call completion only once.

Because of this, the compiler can’t analyze our code. This changes with async functions and tasks. In short:

- async functions simplify asynchronous code

- tasks express relationships between work items

Return a result from a task

import Foundation

Task {

// to access a value returned use `value`

print(await Task(operation: { 0 }).value) // 0

// to access a value or error use `result`

struct MyError: Error {}

switch await Task(operation: { throw MyError() }).result {

case .success(let int): print(int)

case .failure(let error): print("\(type(of: error))") // MyError

}

// in a Task Result, failure is error or never

let _: Result<Int, Never> = await Task(operation: { 0 }).result

let _: Result<Int, Error> = await Task(operation: { throw MyError() }).result

// is the parent Task cancelled?

// (btw, it’s a good idea to cancel tasks on viewWillDisappear or so)

if Task.isCancelled {

return

} else {

// expensive computation

}

}

RunLoop.current.run(

mode: RunLoop.Mode.default,

before: NSDate(timeIntervalSinceNow: 1) as Date

)

An interesting use case is that awaiting task.value lets us share computation results. For instance, let’s say you store the task reference for an ongoing network request, and then the same endpoint is requested again while the first request is still in flight. You don’t need to repeat the request: just await firstTask.value and you’ll read the same result. Is this confusing? Check this annotated example.
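One way to sketch this sharing pattern, under the assumption that requests are identified by a string key: an actor stores the in-flight Task per key, and later callers await the stored task instead of starting new work. Deduplicator, value(for:), and requestTwice are hypothetical names; the sleep stands in for a network request, and finished entries are never evicted in this simplified version.

```swift
actor Deduplicator {
    private var inFlight: [String: Task<Int, Never>] = [:]
    private(set) var started = 0 // how many real computations were launched

    func value(for key: String) async -> Int {
        // Reuse the ongoing task if there is one.
        if let existing = inFlight[key] {
            return await existing.value
        }
        started += 1
        let task = Task<Int, Never> {
            try? await Task.sleep(nanoseconds: 50_000_000) // placeholder for slow work
            return key.count
        }
        // Stored before the first suspension, so a second caller always finds it.
        inFlight[key] = task
        return await task.value
    }
}

func requestTwice() async -> (Int, Int, Int) {
    let deduplicator = Deduplicator()
    async let a = deduplicator.value(for: "abc")
    async let b = deduplicator.value(for: "abc")
    let (x, y) = await (a, b)
    return (x, y, await deduplicator.started)
}
```

Both callers receive the same value while the underlying work runs once.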

Using Task() without priority argument

Using Task() without specifying a priority argument can lead to unintended consequences, as it inherits the priority of the code that created it.

For example, if you spawn multiple Tasks from the main thread, they will all inherit com.apple.root.user-initiated-qos.cooperative and compete with your UI code for CPU resources. This can result in:

- UI hiccups or stuttering.

- Slower overall code execution.

- Potential deadlocks when tasks depend on each other.

- iOS suspending some tasks to avoid exceeding the number of device cores.

To prevent these issues, specify the priority using, for example, Task(priority: .medium).

If deadlocks persist, the talk Swift concurrency: Behind the scenes introduced the environment variable LIBDISPATCH_COOPERATIVE_POOL_STRICT=1. It limits the simulator to one concurrent task per priority, ensuring progress. However, it deviates further from actual device behavior, which allows multiple tasks per priority.

API

- Task.sleep() suspends the task, not the thread.

- task.isCancelled returns a boolean, doesn’t throw.

- Task.checkCancellation() throws if the current task is cancelled.

- task.result or task.value reads the value returned (if any).

Cancellation Propagation:

- Cancelling a parent task cancels its child tasks (created with async let or a task group)

- Cancelling does NOT cancel unstructured or detached tasks started from it (Task {}, Task.detached {})

- Cancelling a task consuming an AsyncStream does NOT automatically cancel the producer - use onTermination

- Always check Task.isCancelled or use checkCancellation() in loops
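The propagation rules can be checked with a small experiment. This is a sketch with hypothetical function names: the first function cancels a task whose work runs in a task-group child, the second cancels a task that started an unstructured Task {} inside it.

```swift
// Child task (task group): cancellation of the enclosing task reaches it.
func childSeesCancellation() async -> Bool {
    let parent = Task<Bool, Never> {
        await withTaskGroup(of: Bool.self) { group in
            group.addTask {
                do {
                    // Task.sleep throws CancellationError when the task is cancelled.
                    try await Task.sleep(nanoseconds: 5_000_000_000)
                    return false
                } catch {
                    return true
                }
            }
            return await group.next() ?? false
        }
    }
    parent.cancel()
    return await parent.value
}

// Unstructured task: cancellation of the enclosing task does not reach it.
func unstructuredSeesCancellation() async -> Bool {
    let outer = Task<Bool, Never> {
        let inner = Task<Bool, Never> {
            try? await Task.sleep(nanoseconds: 200_000_000)
            return Task.isCancelled
        }
        return await inner.value
    }
    outer.cancel()
    return await outer.value
}
```

If the inner work should stop too, keep a reference to the inner task and cancel it explicitly, or restructure it as a child task.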

TaskGroup

TaskGroup is a container designed to hold an arbitrary number of tasks.

let result = await withTaskGroup(of: Int.self) { group -> Int in

group.addTask { ... }

group.addTask { ... }

return await group.reduce(0, +)

}

print(result)

When a TaskGroup is executed, all added tasks begin running immediately and in any order. Once the closure exits, all child tasks are implicitly awaited. In a throwing group, if a child’s error propagates out of the closure, the remaining tasks are canceled, the group waits for them to finish, and then the group rethrows the original error.
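A sketch of the error path, assuming a hypothetical Boom error: one child fails immediately, the error leaves the closure through for try await, and the long-sleeping sibling is cancelled instead of delaying the group for ten seconds.

```swift
struct Boom: Error {}

func failureCancelsSiblings() async -> Bool {
    do {
        try await withThrowingTaskGroup(of: Void.self) { group in
            group.addTask { throw Boom() }
            group.addTask {
                // Cancelled by the group once Boom propagates out of the closure.
                try await Task.sleep(nanoseconds: 10_000_000_000)
            }
            // Rethrows the first child error it encounters.
            for try await _ in group {}
        }
        return false
    } catch {
        // The group rethrows the original error, not the sibling's CancellationError.
        return error is Boom
    }
}
```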

See also: example 1, example 2.

Example


import Foundation

Task {

let values = [1, 3, 5, 7]

let sum = await withTaskGroup(of: Int.self) { group -> Int in

for value in values {

group.addTask { value }

}

return await group.reduce(0, +)

}

print(sum)

}

RunLoop.current.run(

mode: RunLoop.Mode.default,

before: NSDate(timeIntervalSinceNow: 2) as Date

)

- withTaskGroup(of: Int.self) creates a group whose child tasks return Int.

- The for loop iterates through the values, adding one task per value, each returning an Int.

- group.reduce(0, +) awaits the completion of all tasks (imagine they are slow) and sums the results.

If there were 400 values to process, the number of active tasks would likely be limited to the machine's number of cores, although this is not customizable or guaranteed.
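If you do need an upper bound on in-flight child tasks, a common pattern is to seed the group with a fixed number of tasks and add a new one each time a result arrives. This is a sketch, not a built-in feature; process(_:maxConcurrent:) is a hypothetical helper and doubling the value stands in for real work.

```swift
func process(_ values: [Int], maxConcurrent: Int) async -> Int {
    precondition(maxConcurrent > 0, "need at least one concurrent task")
    return await withTaskGroup(of: Int.self) { group in
        var iterator = values.makeIterator()
        var sum = 0

        // Seed the group with at most maxConcurrent tasks.
        for _ in 0..<maxConcurrent {
            guard let value = iterator.next() else { break }
            group.addTask { value * 2 }
        }

        // Each finished task makes room for the next pending value.
        while let result = await group.next() {
            sum += result
            if let value = iterator.next() {
                group.addTask { value * 2 }
            }
        }
        return sum
    }
}
```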

Example 2: Map Reduce


import UIKit

import XCTest

struct MapReduce

{

static func mapReduce<T,U>(inputs: [T], process: @escaping (T) async throws -> U ) async throws -> [U] {

try await withThrowingTaskGroup(of: U.self) { group in

// map

for input in inputs {

group.addTask {

try await process(input)

}

}

// reduce

var collected = [U]()

for try await value in group {

collected.append(value)

}

return collected

}

}

}

final class PhotosTests: XCTestCase

{

func testDownloader() async throws {

let input = [

URL(string: "https://placekitten.com/200/300")!,

URL(string: "https://placekitten.com/g/200/300")!

]

let download: (URL) async throws -> UIImage = { url in

let (data, _) = try await URLSession.shared.data(from: url)

return UIImage(data: data)!

}

let images = try await MapReduce.mapReduce(inputs: input, process: download)

XCTAssertNotNil(images[0])

XCTAssertNotNil(images[1])

XCTAssertEqual(images.count, 2)

}

}

Sendable

Sendable is a marker that indicates types that can be safely transferred across threads. There is a Sendable marker protocol and a @Sendable function attribute.

final class Counter: Sendable { ... }

@Sendable

func bar() { ... }

See also:

- What is thread-safety?

- What is a marker protocol?

- What types are thread-safe? (sorry, long list)

- Compiler checks on Sendable

Thread safety

Thread-safe code is code that remains correct when called from multiple threads.

Correct code is code that conforms to its specification. A good specification defines

- Invariants constraining the state.

- Preconditions and postconditions describing the effects of the operations.

For thread-safe code this means:

- No sequence of operations can violate the specification. That is, see the object in an invalid state or violate the pre/post conditions.

- Invariants and conditions will hold during multithread execution without requiring additional synchronization by the client.

State can be:

- Immutable.

- Mutable private.

- Mutable shared (aka public).

Mutable shared state can be made safe in three ways:

- Prevent access.

- Make the state immutable.

- Synchronize the access.

Related terms:

- liveness: the property that a program eventually makes progress. Liveness failures include:

  - deadlock: two threads block permanently waiting for each other to release a needed resource.

  - livelock: a thread is busy working but it's unable to make any progress.

  - starvation: a thread is perpetually denied access to resources it needs to make progress.

- safe publication: both the reference and the state of the published object must be made visible to other threads at the same time.

- race conditions: a defect where the output is dependent on the timing of uncontrollable events. In other words, a race condition happens when getting the right answer relies on lucky timing.

Marker protocol

A marker protocol is one with these traits:

- It indicates an intent.

- It has no requirements.

- It can’t inherit from a non-marker protocol.

- It can’t be used with is or as?.

Conforming a type to a marker protocol maintains binary compatibility. Obviously, source compatibility is broken if the marker protocol wasn’t previously known.

Thread-safe types

Thread-safe Types: structs, enums, tuples of safe types, actors, immutable classes, internally-synchronized classes, and functions/closures whose capture list (if any) contains safe types.

Not Thread-safe: reference types with unsynchronized mutable state, or value types that point to them, or functions that capture them.

Thread-safe types which have automatic conformance to Sendable

- Nearly every standard library type with value semantics with few exceptions: ManagedBuffer, pointer types, types returned by lazy algorithms.

- Error, because by throwing, any function sends the error across concurrency domains.

- Tuples whose elements are Sendable.

- Metatypes, because they are immutable.

- Key paths literals when they only capture values of Sendable types.

- Actors because they have internal synchronization.

- Closures and functions

- Classes

  - Classes marked with @unchecked that provide their own synchronization.

  - Other classes that fulfill all these conditions:

    - They are final

    - All properties are immutable Sendable types

    - They are root classes or inherit directly from NSObject

    - Generic values (if any) are guaranteed to be of Sendable type (e.g. <T: Sendable>)

- Structs that fulfill the following:

  - All members are Sendable.

  - Generic values (if any) are guaranteed to be of Sendable type (e.g. <T: Sendable>).

  - They are either frozen public structs or non-public structs that are not @usableFromInline

- Enums that fulfill the following:

  - Their associated values are Sendable. If they are generic, the generic must be defined as <T: Sendable>.

  - They are either frozen public enums or non-public enums that are not @usableFromInline

- Some C types

  - C enum.

  - C struct if all properties conform to Sendable.

  - C function pointers (because they cannot capture values).
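To make the "internally-synchronized class" case concrete, here is a minimal sketch of a class that guards its mutable state with a lock and therefore opts in with @unchecked Sendable. LockedCounter and incrementConcurrently are hypothetical names; the compiler does not verify the locking, so correctness is the author's responsibility.

```swift
import Foundation

final class LockedCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    func increment() {
        lock.lock()
        defer { lock.unlock() }
        value += 1
    }

    var current: Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

// Shares one counter across many child tasks.
func incrementConcurrently(times: Int) async -> Int {
    let counter = LockedCounter()
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<times {
            group.addTask { counter.increment() }
        }
    }
    return counter.current
}
```

The lock is only held inside short synchronous methods and never across an await, which keeps it compatible with the cooperative pool.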

Compiler checks on Sendable

For Sendable the compiler checks the following:

- Non-thread-safe types that inherit from Error are Sendable but shouldn’t be. The compiler will issue warnings for those to ease the transition.

- It is a compiler error to mark Sendable on an object that isn’t. But this error can be suppressed by adding @unchecked for classes that use internal synchronization to guarantee thread safety. Example:

final class Foo: Sendable {} // OK

class Foo: Sendable { } // error

class Foo: @unchecked Sendable {} // OK

For @Sendable the compiler checks the following:

- No mutable captures.

- Cannot be both synchronous and actor isolated –this would allow the closure to be sent to another thread and run code on the actor (because it is actor isolated).

- Captures must be of Sendable type.

- Accessors are currently not allowed.

- Closures that have @Sendable function type can only use by-value captures. Example:

import Foundation

func count() {

var n = 0

@Sendable func increment() {

n += 1 // Error: Mutation of captured var 'n' in concurrently-executing code

}

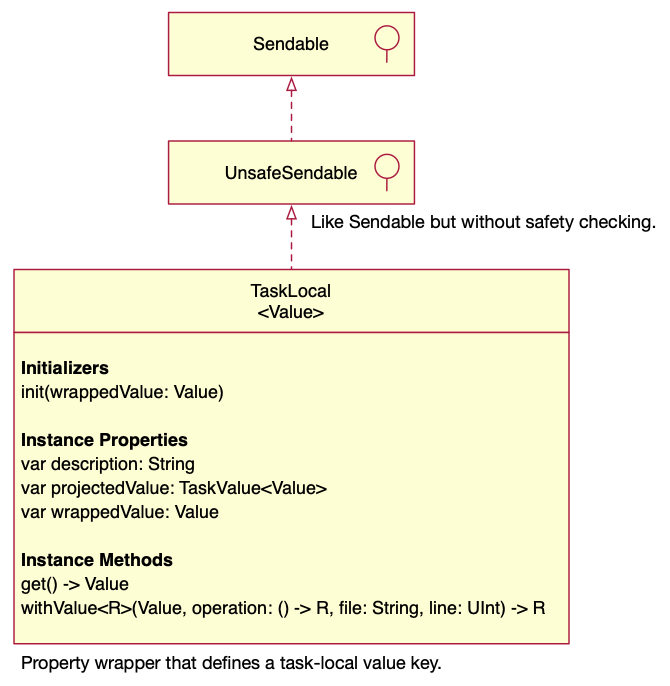

}@TaskLocal

A task local value is a Sendable value associated with a task context. They are inherited by the task’s children. When the root task context ends, the value is discarded.

To declare a property as task-local add static and annotate with @TaskLocal:

enum Request {

@TaskLocal static var id = 0

}

To get/set a task local value:

print(Request.id) // 0

Request.$id.withValue(Request.id + 1) {

print(Request.id) // 1

}

See also:

- Where can I use them?

- API
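A short sketch of how a bound value is seen by tasks started inside withValue, using a hypothetical Trace enum: an unstructured Task {} copies the task-local values of its creator, whereas Task.detached {} starts from the default.

```swift
enum Trace {
    @TaskLocal static var id = "none"
}

// Returns the value seen by an unstructured task and by a detached task.
func observedIDs() async -> [String] {
    await Trace.$id.withValue("abc") {
        let inherited = await Task { Trace.id }.value
        let detached = await Task.detached { Trace.id }.value
        return [inherited, detached]
    }
}
```

Outside the withValue closure, Trace.id reads as the default again.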

Where can I use them?

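Task-local values can be used in:

- a Task {} or Task.detached {},

- or any of their Task children, even a child within a nested group,

- an async function, because it runs in a task internally,

- a sync function, because it emulates task local values with a thread-local implementation,

- a function declared outside but called from within the context.

One restriction shows up in the example below: binding a value with withValue directly inside a task group body triggers an ABORT signal.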

import Foundation

enum Request {

@TaskLocal static var id = 0

}

Task {

await withTaskGroup(of: String.self) { group in

print(Request.id) // OK

Request.$id.withValue(Request.id + 1) {} // ABORT signal

}

}

RunLoop.current.run(

mode: RunLoop.Mode.default,

before: NSDate(timeIntervalSinceNow: 2) as Date

)

API

Actors

An actor is a reference type with mutual exclusion –meaning: only one call to the actor is active at a time. This is also known as actor isolation.

This is implemented using a serial executor which serializes calls from inside or outside the actor. Calls inside the actor run uninterrupted to their end, so they are synchronous. Calls from other types may need to wait for their turn, so they must be asynchronous.

nonisolated is a keyword that disables isolation on selected methods and variables. It is allowed as long as nonisolated code doesn’t interact with isolated code.

actor Counter

{

// this is safe to mark nonisolated since it is a constant

nonisolated let name = "my counter"

var count = 0

func increment() -> Int {

count += 1

return count

}

}

See also: Priority inversion.
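A hedged usage sketch of actor isolation: many child tasks call the same actor concurrently, each call has to be awaited from outside, and no increment is lost. SafeCounter and incrementInParallel are hypothetical names chosen for this illustration.

```swift
actor SafeCounter {
    private(set) var count = 0

    func increment() {
        count += 1
    }
}

func incrementInParallel(times: Int) async -> Int {
    let counter = SafeCounter()
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<times {
            // Calls from outside the actor are asynchronous and must be awaited.
            group.addTask { await counter.increment() }
        }
    }
    return await counter.count
}
```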

Priority inversion

Priority inversion occurs when a low-priority task holds up the progress of a high-priority task, for instance because both need to access a resource guarded with mutual exclusion, e.g. another actor. This is not a problem with actors; let’s see why.

Actor hopping is calling an actor from another actor. This is allowed as long as they read an immutable property or invoke an asynchronous function.

An actor’s function runs uninterrupted (because of mutual exclusion) until it invokes an async function of another actor. At that point, it is suspended. When execution resumes, there is a chance that the actor executed other calls that changed its state, so the rest of the function shouldn’t rely on pre-existing actor’s state.

Actor reentrancy is scheduling work on an actor that already has suspended work items. Whenever the actor has several work items suspended, it is free to reorder their execution. Being able to reorder pending work avoids priority inversion.
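The advice about not relying on pre-existing state after a suspension can be sketched with a hypothetical Account actor. The unsafe variant checks the balance before suspending and acts on that stale check afterwards; the safer variant validates after the suspension, in a stretch of code with no await.

```swift
actor Account {
    private(set) var balance = 100

    // Hypothetical slow step; stands in for any call that suspends.
    private func authorize() async {
        try? await Task.sleep(nanoseconds: 50_000_000)
    }

    // Unsafe: another withdrawal may run while this one is suspended.
    func withdrawUnsafe(_ amount: Int) async -> Bool {
        guard balance >= amount else { return false }
        await authorize()
        balance -= amount // may drive the balance below zero
        return true
    }

    // Safer: check and mutation happen together, after the suspension.
    func withdraw(_ amount: Int) async -> Bool {
        await authorize()
        guard balance >= amount else { return false }
        balance -= amount
        return true
    }
}

func raceTwoWithdrawals() async -> (successes: Int, balance: Int) {
    let account = Account()
    async let first = account.withdraw(100)
    async let second = account.withdraw(100)
    let results = await [first, second]
    return (results.filter { $0 }.count, await account.balance)
}
```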

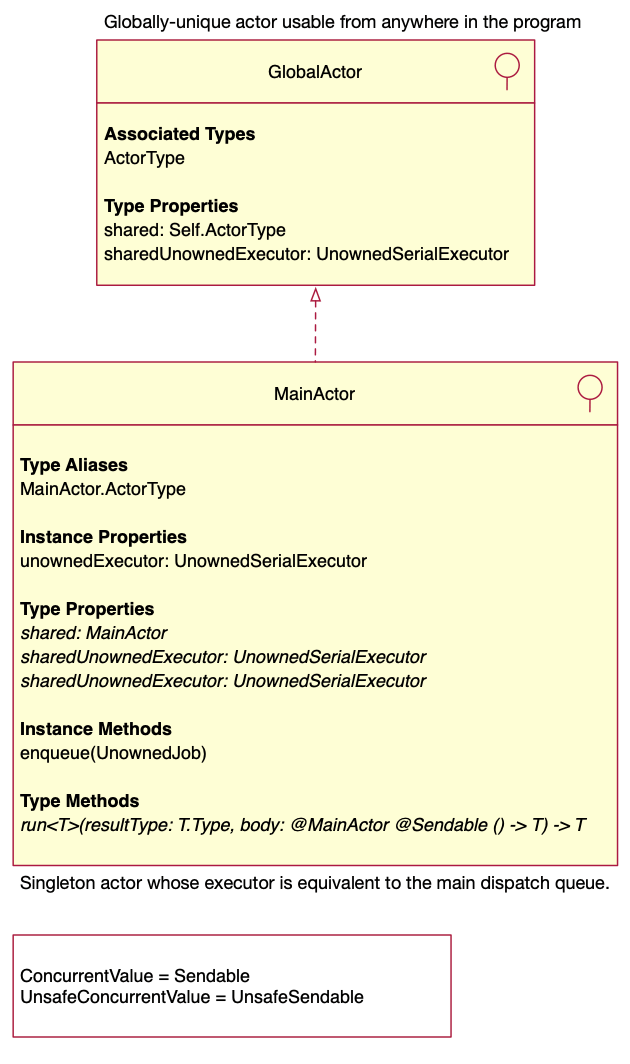

Global actors

A global actor is a singleton actor available globally. It can be used to isolate a whole object, individual properties, methods, and protocols.

import Foundation

// declaration of a custom global actor

@globalActor

actor MyActor {

static let shared = MyActor()

/// Private initializer to ensure singleton pattern

private init() {}

}

// isolation of a whole object

@MyActor

final class SomeClass {

// opting out on selected members

nonisolated let foo = "bar"

}

// isolation of individual properties and methods

final class SomeOtherClass {

@MyActor var state = 0

@MyActor func bar() {}

}

// isolation of a protocol

@MyActor
protocol FooProtocol {
    func foo()
}

@MyActor
final class FooClass: FooProtocol {
    func foo() {}
}

One such actor is the MainActor. Its attribute, @MainActor, indicates that code (a function, property, or a whole type) should run on the main thread. This eliminates the guesswork about whether code should run on the main thread.

Running MainActor.run from an async context is the equivalent to GCD’s DispatchQueue.main.async:

Task {

await MainActor.run {

// same as DispatchQueue.main.async { .. }

}

}

In a function annotated @MainActor, any further async calls don’t block the main thread. For instance:

@MainActor

func fetchImage(for url: URL) async throws -> UIImage {

// doesn’t block main

let (data, _) = try await URLSession.shared.data(from: url)

// image decompression does block main

guard let image = UIImage(data: data) else {

throw ImageFetchingError.imageDecodingFailed

}

return image

}

See also:

- API

- Global actor inference

- Minimize hopping on main

- How to run fully / on main

API

Global actor inference

The following are inferred to be @MainActor without an explicit annotation:

- A class that inherits from a @MainActor superclass.

- A method overriding a @MainActor method.

- A struct or class using a property wrapper with @MainActor for its wrapped value.

- A method that implements a protocol that declares that method as @MainActor. There is an exception where this doesn’t happen: a method implemented in an extension that does not declare conformance to the protocol. See example below.

protocol Configurable {

@MainActor func configure()

}

struct Cell: Configurable {}

extension Cell { // this extension doesn’t declare conformance

func configure() {} // not main actor

}

struct Cell {}

extension Cell: Configurable { // this extension declares conformance

func configure() {} // main actor

}

- A type that implements a protocol that declares @MainActor for the whole protocol. There is an exception where this doesn’t happen: a type that adds conformance in an extension. See example below.

@MainActor

protocol Configurable {}

// main type

struct Cell: Configurable {}

// not main type

struct Cell {}

extension Cell: Configurable {}

These rules imply that if we create an async context and access an object annotated with @MainActor (e.g. a UILabel) the call will automatically be routed through the main thread. However, this is only true if we are in an async context.

For instance, in the following code let uilabel be a UILabel, that is now declared with @MainActor in UIKit.

func f() {

Task {

uilabel.text = "..." // OK, done on main

}

DispatchQueue.global(qos: .background).async {

uilabel.text = "..." // Error!

}

}

Minimize hopping on main

Structured concurrency runs on “cooperative thread pools”, which seem to be dispatch queues. The main actor is an exception because it runs on the main thread. For instance,

Task(priority: TaskPriority.background, operation: {

await MyMainActor.shared.f(value: 0)

})

involves two threads:

Thread 1 Queue : com.apple.main-thread (serial)

Thread 2 Queue : com.apple.root.background-qos.cooperative (serial)

The cooperative pool reuses threads for actors, async functions, etc. But calls to the main actor require a context switch to the main thread, which could decrease your FPS.

As Apple said in WWDC21 session 10254, Swift concurrency: Behind the scenes:

You should weigh the entertainment value of incremental updates (multiple switching) against the efficiency of batching up work (one switch).
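A minimal sketch of batching, assuming a hypothetical @MainActor Model type: the values are computed off the main actor and delivered in a single MainActor.run call, so there is one switch to the main thread instead of one per element.

```swift
@MainActor
final class Model {
    var items: [Int] = []
}

func loadBatched(into model: Model) async {
    // Placeholder for expensive work that runs off the main actor.
    let computed = (1...3).map { $0 * 10 }
    // One hop to main for the whole batch.
    await MainActor.run {
        model.items.append(contentsOf: computed)
    }
}

func batchedItems() async -> [Int] {
    let model = await Model()
    await loadBatched(into: model)
    return await model.items
}
```

Calling a main-actor method once per element would instead suspend and switch threads on every iteration.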

How to run fully / on main

If you have a code path that you want to

- Execute fully (without reentrant calls): put it inside an actor and don’t spawn additional async calls.

- Execute on main: annotate it with @MainActor and don’t spawn additional async calls to code not protected by @MainActor.

Do you know why?

- An actor prevents parallel execution (two active threads running the same code at the same time), not concurrent execution (two threads running the same code one at a time).

- @MainActor guarantees that code runs in main, but async calls to unprotected code MAY suspend your @MainActor code, run the unprotected code on a thread different than main, and cause a reentrant call to your @MainActor code.

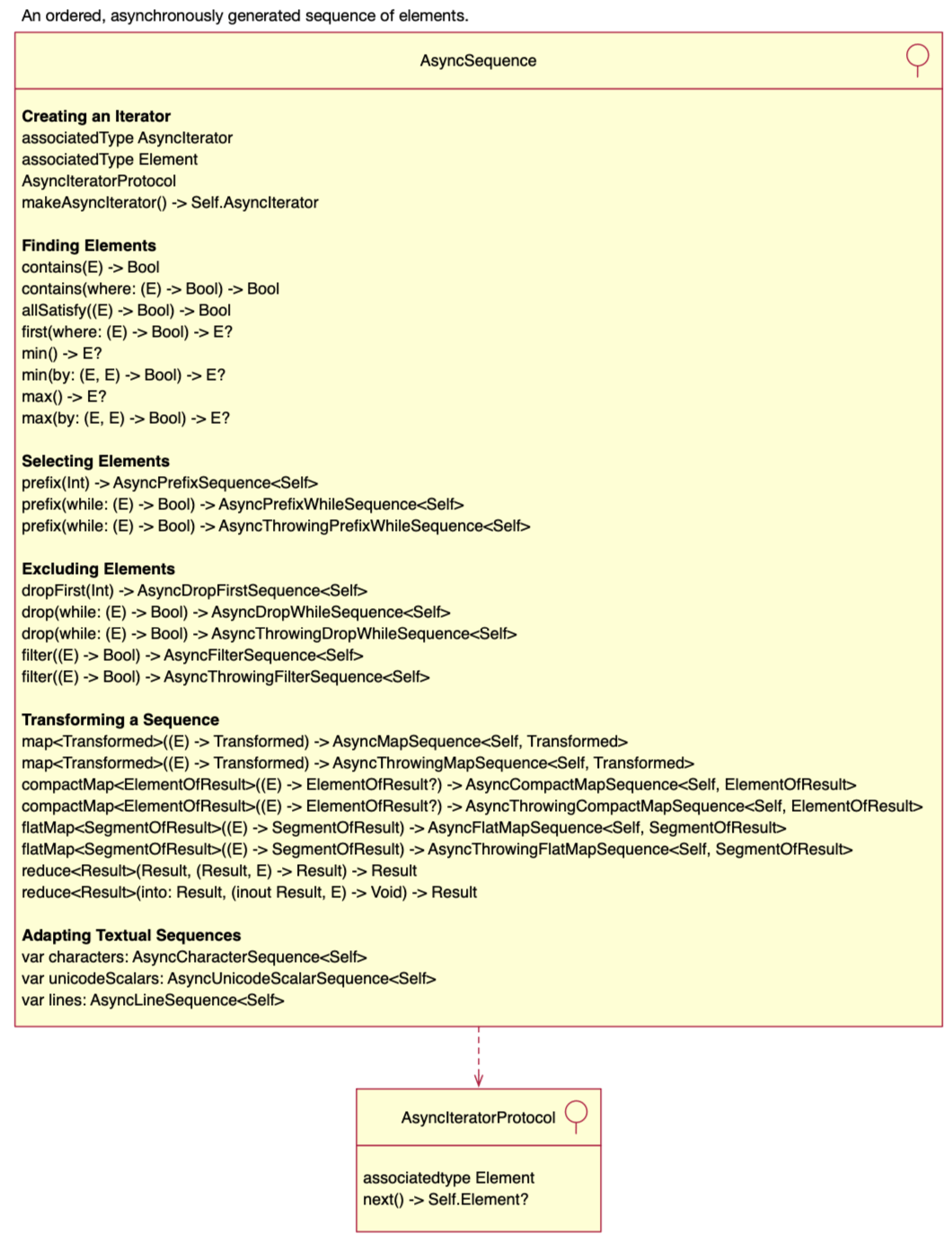

AsyncSequence

AsyncSequence is an ordered, asynchronously generated sequence of elements. Basically, a Sequence with an asynchronous iterator.next(). Example, API.

Many library objects now return async sequences:

let url = URL(string: "http://google.com")!

let firstTwoLines = try await url.lines.prefix(2).reduce("", +)

Example

import Foundation

private enum Source {

case local

case remote

case terminated

}

final class CollectionSequence: AsyncSequence, AsyncIteratorProtocol {

let backend: BackendService

let persistence: CollectionContainer

init(backend: BackendService, persistence: CollectionContainer) {

self.backend = backend

self.persistence = persistence

}

private func localCollections() async throws -> [Collection] {

try await persistence.collections()

}

private func remoteCollections() async throws -> [Collection] {

let dtos = try await backend.perform(operation: .getAllCollections())

try await persistence.save(model: dtos)

return try await localCollections()

}

private var source = Source.local

// MARK: - AsyncSequence

typealias Element = [Collection]

func makeAsyncIterator() -> CollectionSequence {

self

}

// MARK: - AsyncIteratorProtocol

func next() async throws -> Element? {

switch source {

case .local:

source = .remote

return try await localCollections()

case .remote:

source = .terminated

return try await remoteCollections()

case .terminated:

return nil

}

}

}

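// backend and persistence are existing BackendService and CollectionContainer instances

for try await value in CollectionSequence(backend: backend, persistence: persistence) {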

print("collections: \(value)")

}
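Since the example above depends on types that are not shown (BackendService, CollectionContainer), here is a smaller self-contained sketch of the same shape: one type acting as both the sequence and its iterator. Countdown and collectCountdown are hypothetical names.

```swift
struct Countdown: AsyncSequence, AsyncIteratorProtocol {
    typealias Element = Int
    var current: Int

    // Returning nil ends the iteration.
    mutating func next() async -> Int? {
        guard current > 0 else { return nil }
        defer { current -= 1 }
        return current
    }

    func makeAsyncIterator() -> Countdown {
        self
    }
}

func collectCountdown(from start: Int) async -> [Int] {
    var values: [Int] = []
    for await value in Countdown(current: start) {
        values.append(value)
    }
    return values
}
```

Because next() does not throw here, the loop is written for await rather than for try await.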

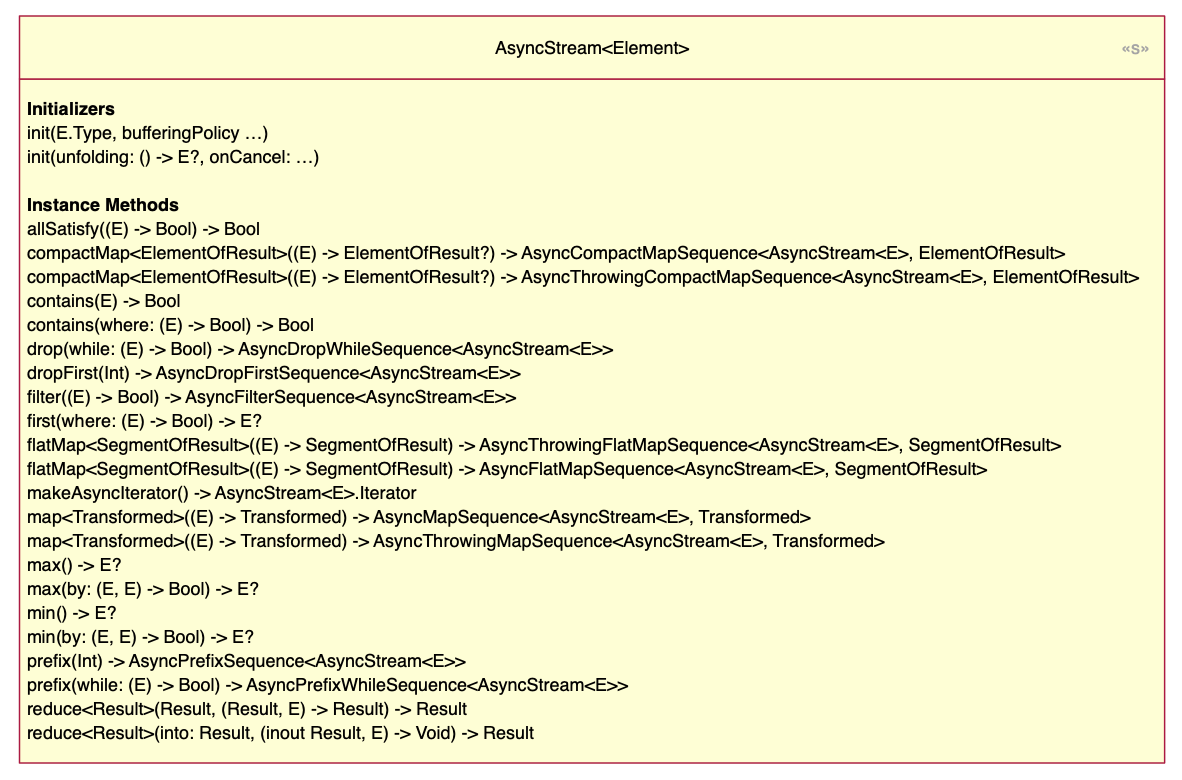

API

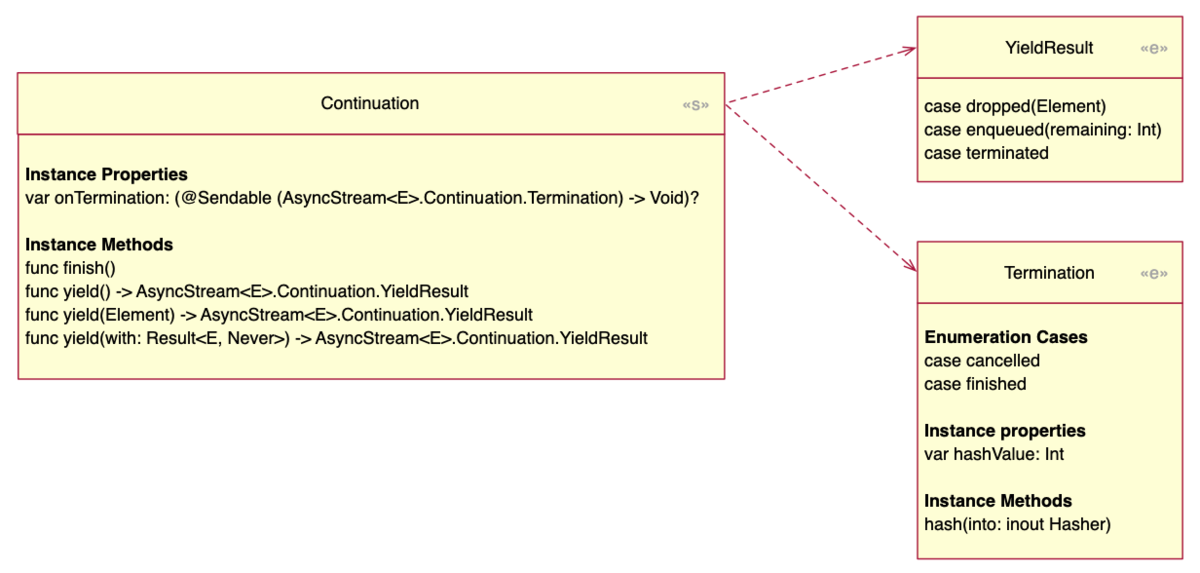

AsyncStream is an asynchronous sequence generated from a closure that calls a continuation to produce new elements. Example, API. Things of note regarding AsyncStream:

- Iterating over an AsyncStream multiple times, or creating multiple iterators is considered a programmer error.

- The closure used to create the AsyncStream can’t call async code. If you need to await, use AsyncSequence instead.

- If your code can throw, use AsyncThrowingStream instead.

- Producer tasks must be explicitly cancelled via the onTermination handler.
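As a sketch of the AsyncThrowingStream point above: the stream below ends with an error part-way through. ParseError and the token list are hypothetical. Elements yielded before the failure are still delivered to the consumer before the error is thrown.

```swift
// Hypothetical error type for this illustration.
enum ParseError: Error {
    case notANumber(String)
}

let numbers = AsyncThrowingStream<Int, Error> { continuation in
    for token in ["1", "2", "x", "4"] {
        guard let value = Int(token) else {
            // Ends the stream with an error instead of a plain finish().
            continuation.finish(throwing: ParseError.notANumber(token))
            return
        }
        continuation.yield(value)
    }
    continuation.finish()
}

var received: [Int] = []
var failure: Error?
do {
    // 'try' is required because next() can throw.
    for try await value in numbers {
        received.append(value)
    }
} catch {
    failure = error
}
print(received, failure as Any)
```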

Example

import Foundation

let stream = AsyncStream(Int?.self) { continuation in

continuation.onTermination = { @Sendable reason in

print("Termination reason: \(reason)") // finished or cancelled

}

for n in [0, nil, 2] {

// ... imagine a slow async process returning 'n'

continuation.yield(n)

}

continuation.finish()

}

Task {

for await value in stream {

print(value as Any) // 0, nil, 2

}

}

// Keep the script alive for two seconds so the Task above can run.

RunLoop.current.run(

mode: .default,

before: Date(timeIntervalSinceNow: 2)

)

Iteration ends when iterator.next() either throws or returns nil.

When iterating a sequence of optionals, the nil that ends the iteration is Optional.none, not Optional.some(nil). For instance, in this example next() returned:

- .some(0)

- .some(nil)

- .some(2)

- .none, which marks the end, so the loop body is not executed

API

Cancellation Handling

When creating AsyncStream/AsyncThrowingStream with a Task producer, the producer must be cancelled explicitly or it will continue running even after the consumer is cancelled.

// Problem: Producer continues after consumer cancels

func updates() -> AsyncStream<Update> {

AsyncStream { continuation in

Task {

// This task keeps running forever!

while true {

try? await Task.sleep(for: .seconds(1))

continuation.yield(Update())

}

}

}

}

// Solution: Producer cancels when consumer cancels

func updates() -> AsyncStream<Update> {

AsyncStream { continuation in

let producerTask = Task {

while !Task.isCancelled {

try? await Task.sleep(for: .seconds(1))

continuation.yield(Update())

}

continuation.finish()

}

// Cancel producer when stream terminates!

continuation.onTermination = { @Sendable _ in

producerTask.cancel()

}

}

}

Why this happens: The Task consuming the stream and the Task producing values are separate. Cancelling the consumer doesn't automatically cancel the producer; you must connect them via onTermination.

Testing tip: Don't rely on Task.sleep timing in tests, because it creates race conditions. Instead, cancel at specific checkpoints:

// Test cancellation deterministically

let task = Task {

for await update in updates() {

if update.isTargetUpdate {

throw CancellationError() // Cancel at exact point

}

}

}

Note that in the example above, CancellationError is a Swift type that can be thrown to indicate task cancellation. If you throw any other error, the task still exits, but it is treated as a regular failure rather than a cancellation.

Common mistakes:

- Forgetting to store the producer Task reference

- Not using `onTermination` handler

- Not checking `Task.isCancelled` in producer loops

- Testing with sleep/timing instead of deterministic conditions
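The checkpoint idea can be sketched without any sleeps. This is a reduced illustration, assuming Swift 5.9 or later for AsyncStream.makeStream; all names are hypothetical. The producer is inline rather than a Task, and onTermination records the termination reason instead of cancelling a producer. The consumer signals a checkpoint after a known element, and only then is it cancelled.

```swift
// Hypothetical helper that runs the whole scenario and returns what was observed.
func runCancellationCheckpoint() async -> (seen: [Int], reasons: [String]) {
    let (values, valueSink) = AsyncStream.makeStream(of: Int.self)
    let (checkpoint, checkpointSink) = AsyncStream.makeStream(of: Void.self)
    let (reasons, reasonSink) = AsyncStream.makeStream(of: String.self)

    // Record why the value stream terminated.
    valueSink.onTermination = { @Sendable reason in
        if case .cancelled = reason {
            reasonSink.yield("cancelled")
        } else {
            reasonSink.yield("finished")
        }
        reasonSink.finish()
    }

    let consumer = Task { () -> [Int] in
        var seen: [Int] = []
        for await value in values {
            seen.append(value)
            // Signal the checkpoint once the target element arrives.
            if value == 2 { checkpointSink.yield(()) }
        }
        return seen
    }

    for n in 0...2 { valueSink.yield(n) }

    // Wait for the checkpoint instead of sleeping, then cancel.
    for await _ in checkpoint { break }
    consumer.cancel()

    let seen = await consumer.value
    var recorded: [String] = []
    for await reason in reasons { recorded.append(reason) }
    return (seen, recorded)
}

let result = await runCancellationCheckpoint()
print(result.seen, result.reasons)
```

In a real test the onTermination handler would cancel the producer Task, and the assertion would check that the producer finished.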

Preparing for Swift 6

The article Concurrency in Swift 5 and 6 talks about restrictions that will be applied in Swift 6. To enable some of them, pass these flags to the compiler:

OTHER_SWIFT_FLAGS: -Xfrontend -warn-concurrency -Xfrontend -enable-actor-data-race-checks

Or, from SPM, add this to your target:

swiftSettings: [

.unsafeFlags([

"-Xfrontend", "-warn-concurrency",

"-Xfrontend", "-enable-actor-data-race-checks",

])

]

Sometimes the solution is straightforward, e.g. use weak self to refer to controllers, or add mutated objects to the capture list. Some errors are a real pain, e.g. when a type such as Data is not marked Sendable in your SDK, you can’t pass it around and it may be too expensive to copy.

These flags may or may not be useful. They

- reveal some errors that may pass undetected in the default compiler

- force you to consider edge cases in your code

- are at times too restrictive to be taken seriously

According to Doug Gregor:

To be very clear, I don’t actually suggest that you use -warn-concurrency in Swift 5.5. It’s both too noisy in some cases and misses other cases. Swift 5.6 brings a model that’s designed to deal with gradual adoption of concurrency checking.

See also: Staging in Sendable checking.
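Later toolchains replaced -warn-concurrency with a strict concurrency setting that has three levels: minimal, targeted, and complete. A minimal sketch of a Package.swift that opts a target into complete checking, assuming Swift 5.9 or 5.10 tools and a hypothetical target named MyLibrary:

```swift
// swift-tools-version:5.9
import PackageDescription

let package = Package(
    name: "MyLibrary", // hypothetical package name
    targets: [
        .target(
            name: "MyLibrary", // hypothetical target name
            swiftSettings: [
                // Reports Swift 6 data-race diagnostics as warnings.
                .enableExperimentalFeature("StrictConcurrency")
            ]
        )
    ]
)
```

From the command line, the equivalent compiler flag is -strict-concurrency=complete, for instance swift build -Xswiftc -strict-concurrency=complete.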

Testing

Not much is new. From XCTestCase:

If your app uses Swift Concurrency, annotate test methods with async or async throws instead to test asynchronous operations, and use standard Swift concurrency patterns in your tests.

For instance

import XCTest

final class MyTests: XCTestCase {

override func setUp() async throws { ... }

func testMyCode() async throws { ... }

}

However, class methods do not seem to be supported. Locking primitives such as NSLock or os_unfair_lock_lock used inside them are ignored. I guess you could use a run loop and check for a side effect, but that is too ugly.

// not supported

override class func setUp() async throws { ... }

See also

- Proposals implemented in Swift 5.5

- Swift Standard Library > Concurrency

- Swift evolution

- WWDC sessions on Swift concurrency

| Proposal | Title |
| --- | --- |
| SE-0296 | Async/await |
| SE-0298 | Async/Await: Sequences |
| SE-0300 | Continuations for interfacing async tasks with synchronous code |
| SE-0302 | Sendable and @Sendable closures |
| SE-0306 | Actors |
| SE-0311 | Task Local Values |
| SE-0314 | AsyncStream and AsyncThrowingStream |
| SE-0316 | Global actors |
| SE-0317 | async let bindings |

| Year | Session | Title |
| --- | --- | --- |
| 2021 | #10132 | Meet async/await in Swift |
| 2021 | #10133 | Protect mutable state with Swift actors |
| 2021 | #10134 | Explore structured concurrency in Swift |
| 2021 | #10017 | Bring Core Data concurrency to Swift and SwiftUI |
| 2021 | #10019 | Discover concurrency in SwiftUI |
| 2021 | #10158 | Meet AsyncSequence |
| 2021 | #10194 | Swift concurrency: Update a sample app |
| 2021 | #10095 | Use async/await with URLSession |
| 2021 | #10254 | Swift concurrency: Behind the scenes |